Generative A.I. is already used in multiple industries, where it is trained on data scraped from publicly accessible sources, and its next step is spreading further into film, television and commercials.

Artificial intelligence has been heralded by OpenAI as something which “benefits all humanity”. However, there are concerns about jobs being replaced and about how ethical it is to train A.I. models on human-made works.

Here are the shortcut links to each section in the article:

- Generative A.I. in film and TV

- How does Generative A.I. work?

- OpenAI’s plan for Generative A.I. in Hollywood

- A recent ‘Willy Wonka’ event using Generative A.I.

- Art communities being affected

- What are the Copyright Laws for Generative A.I.?

- How could Generative A.I. become ethical?

- Employment Law about A.I.

- Is there room for Generative A.I. to co-exist with human-made work in industries?

Generative A.I. in film and TV

At the moment, Generative A.I. isn’t yet prominent in film and TV, but it is in the early stages of being brought in. Meanwhile, it is already flooding the wider arts and entertainment industry, art communities, social media platforms and various other sites and programs.

Studios have been looking to use it as a cost-cutting measure, most noticeably in the opening titles of Marvel Studios’ series Secret Invasion and in A.I.-generated imagery in the recent film Late Night With the Devil, both of which caused an uproar online.

Cameron and Colin Cairnes, the directors of Late Night With the Devil, did not disclose their use of Generative A.I. until an early reviewer spotted the imagery in the film, which prompted Variety to ask them about it.

Hollywood union strikes

These projects were also made before the two lengthy Hollywood strikes by WGA writers and SAG-AFTRA performers, during which concern grew about A.I. replacing people’s jobs. Late Night With the Devil was released publicly on March 19, 2024, so the filmmakers arguably had enough time to replace the three A.I.-generated images by paying designers to create fully original work, instead of only editing generative A.I. content.

When the SAG-AFTRA strike ended in November 2023, a billion-dollar deal was reached that introduced safeguards protecting performers from being pressured into signing away the use of A.I. digital replicas of their likeness. Without these, a performer could have ended up in an entirely different film as an A.I. replica without their knowledge.

There was also the WGA deal reached in September 2023, which requires disclosure of material written by A.I. and includes safeguards for writers against A.I. use. However, this deal did not focus on protecting visual arts jobs from being replaced by Generative A.I.

Art guilds such as the British Film Designers Guild and The Guild in the UK, the Art Directors Guild (ADG) and The Animation Guild in the US, and other art guilds around the world would need to go on strike to reach a deal that protects artists from the exploitation of Generative A.I. in film, television and commercials.

In 2023, The Animation Guild (TAG) set up an A.I. Taskforce ‘to examine the impact of machine learning and AI on the animation industry and its workers’.

One of the findings from this was the loss of jobs: ‘Three-fourths (75%) of survey respondents indicated GenAI tools, software, and/or models had supported the elimination, reduction, or consolidation of jobs in their business division.’

New jobs are also likely to be created around A.I.: ‘At the same time, most executives and managers indicate GenAI has already led to the creation of new job titles and roles in their organization. Whether these new jobs will offset inevitable job losses is not clear.’

BBC incorporating Generative A.I. into multiple departments

An article about A.I., since deleted, was also published on the BBC Media Centre site (link now unavailable). It was written by David Housden, Head of Media Inventory: Digital, who was leading a Gen A.I. pilot.

The article described the BBC experimenting with speeding up its marketing department by using text-based generative A.I. combined with ‘human oversight’ to correct any errors in what the A.I. produces.

These were a small number of tests that began with Doctor Who emails and push notifications, using a model trained on a dataset of Whoniverse content. However, Deadline reports that after multiple complaints, the BBC has dropped its plans to use A.I. in marketing to promote Doctor Who. The BBC’s official response to the complaints is published on its site.

Although the BBC has dropped plans to use it to promote Doctor Who, multiple other “Gen A.I. pilots” are still being rolled out, such as equipping ‘journalists with Gen A.I. tools that help them work more quickly.’, ‘more personalized marketing’ and ‘translating content’.

There is also A.I. voice work: the BBC was recently in the news again over how it handled firing an actor in order to use an A.I. recreation of the voice of a person who had died, for a documentary.

How does Generative A.I. work?

Generative A.I. has grown considerably in recent years through publicly available tools such as OpenAI’s ChatGPT, which is built on a Large Language Model (LLM), and image generators such as OpenAI’s DALL·E 3, Midjourney and Stability AI’s Stable Diffusion.

Users type a text prompt into an input field. There is little consistency or quality control over how a result will turn out, although a more detailed prompt tends to produce a more detailed result that is closer to what was wanted.

Results also depend on whether the model has been trained on enough content about the requested subject. For example, a common issue in many models is poorly rendered hands, often attributed to a lack of suitable training data, which produces people with more or fewer fingers than they should have, or other distortions.

What are Generative A.I. models trained on?

Most of these models are trained on datasets of millions of human-made works (books, text, website articles, images of artworks, photography, audio, etc.). This is usually done without the consent of, or compensation to, the creators of those works, alongside copyright-free and public domain works.

Some services also accept image and audio uploads, which are used to generate new content. For example, this TikTok account used a site called Jammable (formerly known as Voicify.ai) to generate this “A.I. cover” of Freddie Mercury singing Michael Jackson’s Beat It.

According to the Jammable site, uploads are used to train your own custom voice models, and ‘custom voice models will take around 1 – 6 hours to train’. However, this TikToker was clearly able to submit other musicians’ work to train a model.

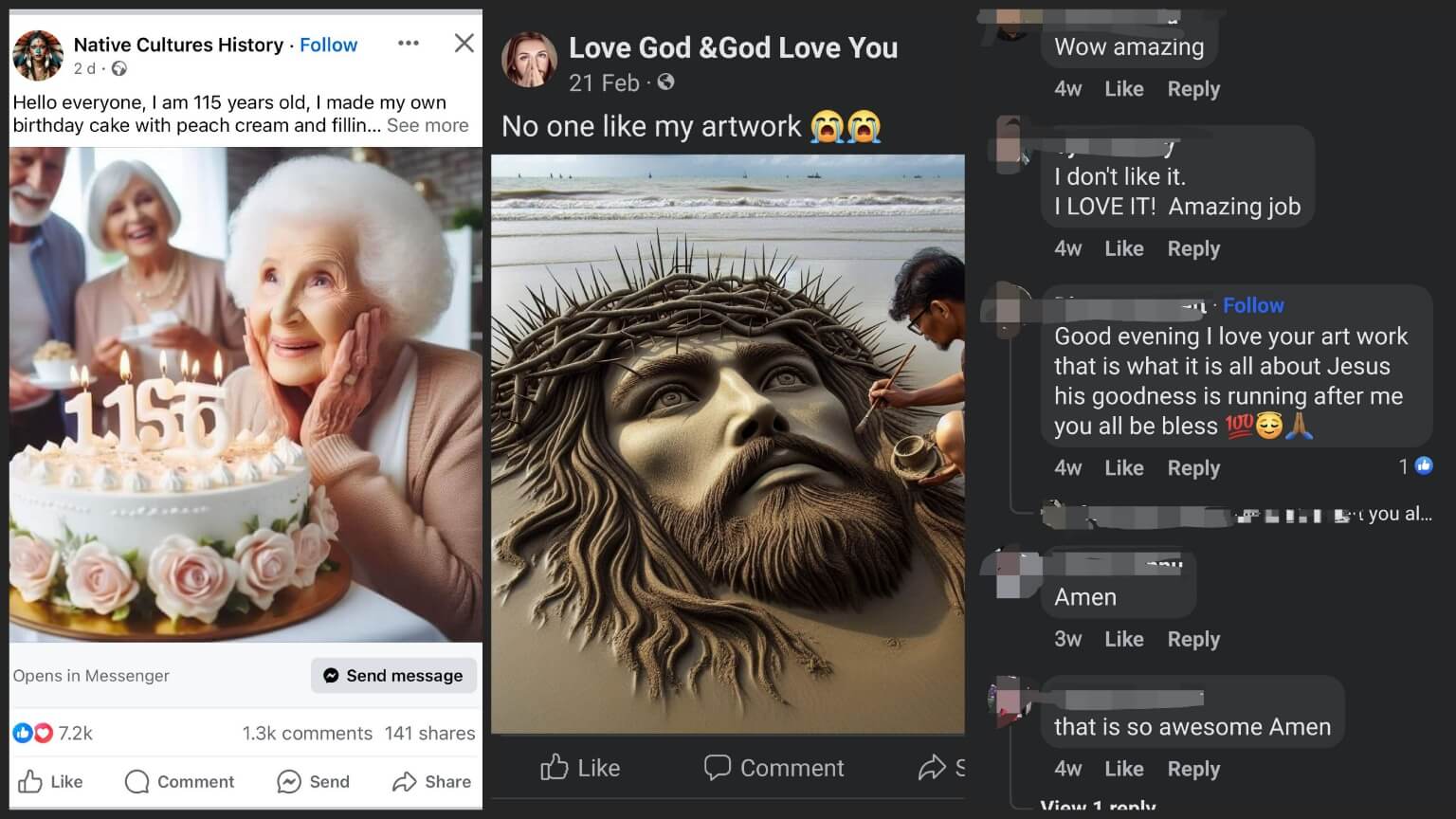

The Jammable site also hosts A.I. images of cartoons such as The Simpsons and Family Guy, as well as of celebrities and US politicians, along with A.I. voice recordings of Joe Biden and a variety of celebrities. These could be used to spread misinformation and to scam people who are not well-informed about A.I., just as rampant generated imagery is already fooling people on Facebook.

Deepfake A.I. has been used since as far back as 2017 to create fake celebrity images and adult content online, causing distress to those who did not consent to it. Most recently, a page was spreading fake explicit A.I. images of Taylor Swift. Scientific American reports that ‘Tougher AI Policies Could Protect Taylor Swift—And Everyone Else—From Deepfakes’.

Safeguarding against the misuse of prompts

Most models, including ChatGPT, block certain prompt words to stop people from requesting abusive or harmful content, and OpenAI now appears to block certain names to prevent users from generating characters protected by intellectual property and copyright law.

However, these blocks still appear to have flaws, and there is evidence of the models being trained on protected works: this user was able to bypass the blocked prompts simply by using the Polish word for “Spider-Man” instead.

OpenAI’s plan for Generative A.I. in Hollywood

OpenAI CEO Sam Altman arranged meetings with Hollywood film studios to pitch the use of OpenAI’s latest text-to-video model, Sora A.I., to generate Hollywood movies.

At the moment Sora A.I. isn’t open to the public, but selected people have been invited to access the model, which can deliver videos up to one minute long. Multiple videos showcasing its capabilities are on OpenAI’s website. The model builds on the trial and error of OpenAI’s previous models, such as DALL·E 3 and GPT.

OpenAI posted a short film made with Sora A.I. called “Air Head”, about a man with a balloon for a head. Director Walter Woodman said, “We now have the ability to expand on stories we once thought impossible”.

However, this film could have been made without A.I. It’s not impossible: a regular studio could have made it with enough time, imagination, skill and money, and the result would also have been copyrightable!

Paul Trillo, another filmmaker, shared his experience of using Sora in a second video:

“Working with Sora is the first time I’ve felt unchained as a filmmaker. Not restricted by time, money, other people’s permission, I can ideate and experiment in bold and exciting ways.” Why would he say he is finally not restricted by other people’s permission? It seems to imply he is happy to skip getting consent from the owners of the original works the model was trained on in order to generate his video.

A recent ‘Willy Wonka’ event using Generative A.I.

Outside of Hollywood, there was the controversial Willy Wonka event in Glasgow on February 24, 2024, which angered parents who took their children to what turned out to be a low-quality event that fell far short of its marketing.

Billy Coull, who organized the event, had used misleading A.I.-generated images to advertise it on the website and as background images at the event, and had given the actors nonsensical scripts generated by ChatGPT.

After parents called the police, the event was soon cancelled, and the situation went viral online, spawning multiple TV documentaries, various marketing videos and an upcoming film.

Art communities being affected

Art communities are also being affected: a company such as deviantART approves and actively promotes Generative A.I. sellers for quick profits, even though the platform only gained its popularity through over 20 years of amateur and professional artists contributing human-made works to the site.

Two A.I. accounts branded as “top sellers” on deviantART earned around $12,000 and $25,000 from A.I.-generated imagery (before any fees deviantART may charge). Both accounts (Mikonatai and Isaris-AI) were active for about a year: the first generated 2.6K images and the second 9.3K, which for the second account alone averages roughly 25.5 images per day over 365 days.
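The per-day figure above is simply each account’s reported total divided by 365 days. Here is a minimal Python sketch of that arithmetic. It assumes both totals cover exactly one year of evenly spread activity, and the `images_per_day` name is purely illustrative:

```python
# Reported image totals for the two deviantART "top seller" A.I. accounts,
# assuming each total covers exactly one year of activity.
REPORTED_TOTALS = {
    "first account": 2_600,
    "second account": 9_300,
}
DAYS_IN_YEAR = 365


def images_per_day(total_images: int, days: int = DAYS_IN_YEAR) -> float:
    """Average number of images generated per day over the given period."""
    return total_images / days


if __name__ == "__main__":
    for label, total in REPORTED_TOTALS.items():
        print(f"{label}: {images_per_day(total):.2f} images per day")
```

This prints about 7.12 images per day for the first account and 25.48 for the second, so the larger account alone averaged roughly 25.5 images every day of the year.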

What are the Copyright Laws for Generative A.I.?

In 2018, Stephen Thaler attempted to claim copyright on an A.I.-generated image based solely on the fact that he owned the machine that generated it. After the US Copyright Office refused registration, Thaler took the case to federal court. It was determined that the work “lacked human authorship”, as it was created autonomously by the computer, and therefore could not be copyrighted.

Getty Images recently took Stability AI to court, accusing it of training models on Getty-owned images. However, Stability AI operates and trains its models in the US and has claimed the copying as “fair use”, a US defence that does not exist in UK law.

OpenAI likewise claims that training ChatGPT’s Large Language Model (LLM) on such content falls under fair use.

This fair use position is supported by the Library Copyright Alliance in the US, which stated in 2023:

‘Based on well-established precedent, the ingestion of copyrighted works to create large language models or other AI training databases generally is a fair use.’

- ‘Because tens-if not hundreds-of millions of works are ingested to create an LLM, the contribution of any one work to the operation of the LLM is de minimis; accordingly, remuneration for ingestion is neither appropriate nor feasible.’

- ‘Further, copyright owners can employ technical means such as the Robots Exclusion Protocol to prevent their works from being used to train AIs.’

How could Generative A.I. become ethical?

The EU A.I. Act says the following about General-Purpose A.I. (GPAI) models:

‘GPAI system transparency requirements. All GPAI models will have to draw up and maintain up-to-date technical documentation and make information and documentation available to downstream providers of AI systems. All providers of GPAI models have to put a policy in place to respect Union copyright law, including through state-of-the-art technologies (e.g. watermarking), to carry out lawful text-and-data mining exceptions as envisaged under the Copyright Directive. Furthermore, GPAIs must draw up and make publicly available a sufficiently detailed summary of the content used in training the GPAI models according to a template provided by the AI Office.’

‘If located outside the EU, they will have to appoint a representative in the EU. However, AI models made accessible under a free and open source will be exempt from some of the obligations (i.e. disclosure of technical documentation) given they have, in principle, positive effects on research, innovation and competition.’

One suggestion for commercial use: models could be open source and trained only on public domain works and/or works whose copyright holders have consented, with the creators of any sourced work both giving consent and being compensated.

However, even if content creators do intend to give their consent, it would take a lot of money to compensate someone for their future loss of earnings, whether through a lump sum or through royalties paid every time their work is involved in generating an image.

This can also create a bizarre situation: someone applying for a job may be competing with a model that the company has already trained on their own work, without their consent, as creators are not notified when their works are used.

For example, if an animator applies to a studio that uses A.I. models, the studio may already have trained its models on that animator’s work. If the animator doesn’t get the job, the studio still has their work, provided it has been data-scraped.

Should unethical A.I. be banned in professional working environments?

If Generative A.I. models can’t be made ethical, should their use be banned or unapproved in professional working environments? Outside of professional settings, unethical A.I. models are unlikely to ever disappear completely among members of the public generating images for personal use, in the same way that piracy still exists.

And would every company adopt ethical A.I. models if it meant paying millions or billions to the people whose data was gathered for those models?

There should always be effective tools to combat data scraping by letting creators opt in or out of having their content used. Tools like this exist on some sites, and website owners can add rules to their site’s robots.txt file to stop OpenAI’s crawler from collecting their content for ChatGPT.
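As a minimal sketch of how such a robots.txt rule works, the Python example below uses the standard library’s `urllib.robotparser` to check a sample robots.txt that disallows `GPTBot`, the user-agent name OpenAI documents for its web crawler (crawler names can change, so check OpenAI’s current documentation). The sample rules, the example URL and the `is_allowed` helper are hypothetical. Note that robots.txt is only a request that well-behaved crawlers choose to honour; it does not technically block access.

```python
from urllib.robotparser import RobotFileParser

# Sample robots.txt content (an assumption for illustration): it asks OpenAI's
# GPTBot crawler to stay away from the whole site, while allowing every other crawler.
SAMPLE_ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Allow: /
"""


def is_allowed(user_agent: str, url: str, robots_txt: str = SAMPLE_ROBOTS_TXT) -> bool:
    """Return True if the given robots.txt rules permit user_agent to fetch url."""
    parser = RobotFileParser()
    # parse() takes the file's lines directly, so no network request is made.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    # Hypothetical page on an artist's portfolio site.
    page = "https://example.com/portfolio/artwork.png"
    print("GPTBot allowed:", is_allowed("GPTBot", page))
    print("Googlebot allowed:", is_allowed("Googlebot", page))
```

Run as written, this reports that GPTBot is disallowed and Googlebot is allowed. On a real site, only the rule lines themselves (such as the `User-agent: GPTBot` and `Disallow: /` pair) go into the robots.txt file at the root of the domain.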

What’s to stop companies from training on the works of creators and then never employing them again?

One suggestion would be some form of licensing limit on the use of works trained into these models. The models shouldn’t be transferred to other companies without consent from, and compensation for, the creator(s) whose work they were trained on, or their estates. Otherwise, a person’s own work, through the A.I. trained on it, could end up competing with them for jobs at other companies in the same field, leading to a loss of future work for them and for others.

Another suggestion would be future changes to employment law, requiring companies that adopt A.I. to keep employing a certain percentage of people in the same field.

Employment Law about A.I.

For now, the UK Parliament’s current research briefing on Artificial Intelligence and Employment Law states:

‘There are currently no explicit UK laws governing the use of AI and other algorithmic management tools at work.’

In the newly approved EU Artificial Intelligence Act, the section on transparency risks states:

‘Providers of AI systems that generate large quantities of synthetic content must implement sufficiently reliable, interoperable, effective and robust techniques and methods (such as watermarks) to enable marking and detection that the output has been generated or manipulated by an AI system and not a human. Employers who deploy AI systems in the workplace must inform the workers and their representatives.’

Overall, not enough appears to be done to protect human content creators from having their works used to train these models.

Is there room for Generative A.I. to co-exist with human-made work in industries?

For now, this comes down to ethics, regulation, copyright, quality over quantity, and the complete creative control over original creations that human-made work offers compared with generative A.I.

A.I. as a tool that helps humans with the tedious aspects of editing human-made works has largely been accepted for some time now, as it doesn’t automate entire works.

Generative A.I., however, creates an unfair advantage in how much work can be delivered, which is unlikely to be good for job security. A rise in Generative A.I. is unlikely to help bring down unemployment rates, which are increasing.

Also, what happens when more and more companies start to favour and hire people who can bulk-generate thousands of pieces of content per day over skilled creative people who spend longer producing a few original human-made works?

This is already happening. Artist Ron Ashtiani was on a call with someone interested in hiring him and requested concept artists to help with the job, which could take four months, only to be told that the company was using A.I. for everything.

And what happens when companies producing human-made work are constantly outbid by A.I. companies that partially or fully generate feature-length movies, TV shows or advertisements, potentially replacing entire production crews?

More publications and blogs are also generating articles with A.I., potentially costing writers their jobs.

The Guardian reports that, in a worst-case scenario, up to 8 million jobs in the UK alone could be lost to A.I. automation. These people, and many more around the world, could lose their jobs unless workers are protected against this exploitation.
